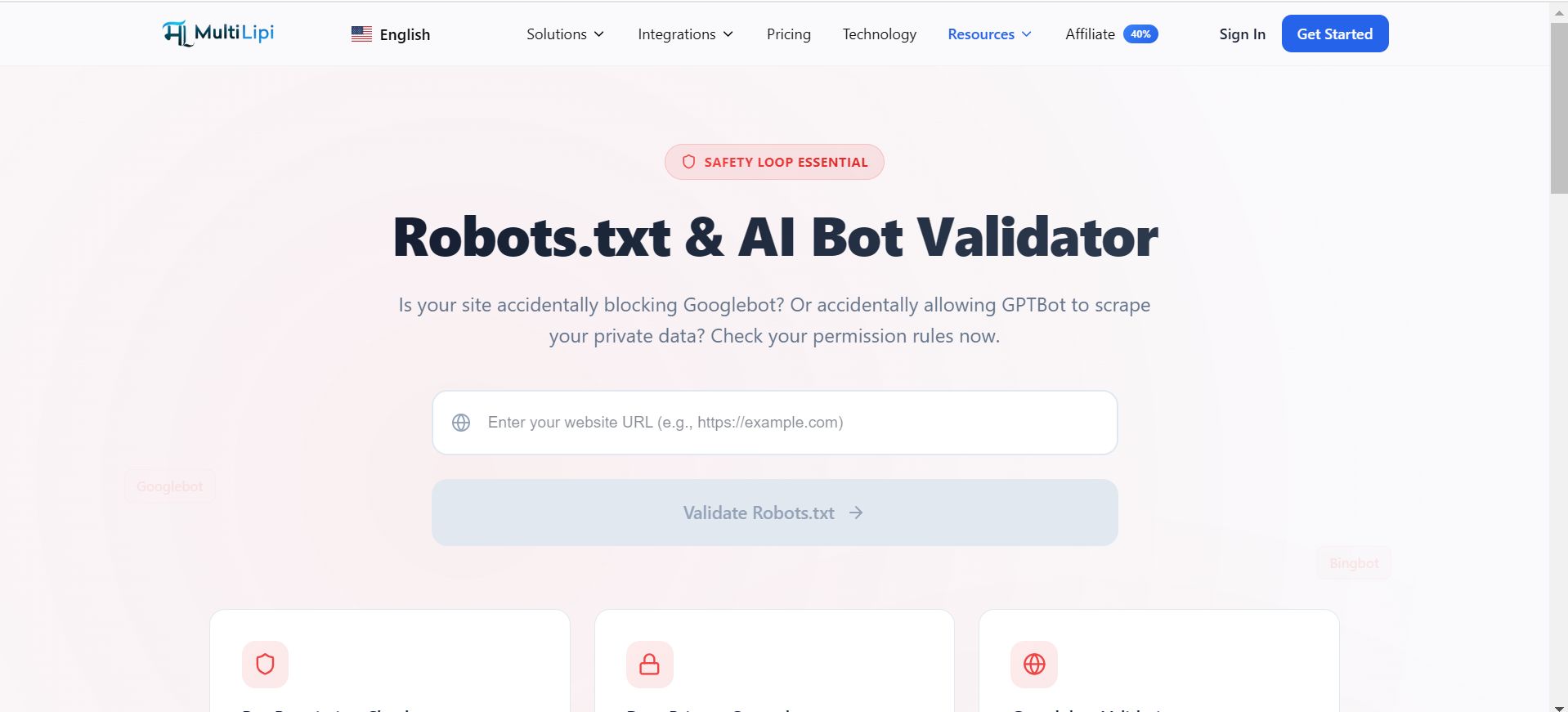

Audit your crawler permissions and control AI data scraping—at no cost.

In the age of generative AI, your robots.txt file is one of the most important access-policy documents on your server. It is the gatekeeper that tells Googlebot "Welcome" and tells GPTBot (OpenAI) or CCBot (Common Crawl) whether they are allowed to ingest your proprietary content to train their models.

The MultiLipi Robots.txt Validator is a free engineering utility designed to audit your permission rules. It ensures you aren't accidentally blocking SEO traffic while verifying your stance on AI scraping agents.

The "Safety Loop" Essential

SEO Visibility vs. AI Privacy.

A single misplaced rule in this file, such as a stray Disallow: / under User-agent: *, can stop Google from crawling your entire website. Conversely, a missing rule can allow AI companies to scrape your entire blog archive without compensation.

The SEO Risk

Blocking Googlebot or Bingbot destroys your traffic.

The AI Risk

Allowing GPTBot or ClaudeBot means your content can be collected as training data.

The Balance

Our tool validates that your "Allow" and "Disallow" directives are syntactically correct and targeting the specific agents you intend to manage.

The Audit Protocol

How to validate your gatekeeper.

Don't assume your permissions are correct. Verify them against live crawler standards.

Access the Free Tool

Navigate to the Robots.txt Validator.

Input Endpoint

Enter your root domain (e.g., https://example.com).

Execute Scan

Click the Validate Robots.txt button.

Review Logic

Examine Syntax Check, Bot-Specific Analysis, and Reachability.

Review logic in detail:

Syntax Check: Flags invalid wildcards or path errors

Bot-Specific Analysis: Checks permissions for major agents such as Googlebot, GPTBot, Bingbot, and CCBot

Reachability: Confirms the file is accessible and returning a 200 OK status code
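
To make the Syntax Check item concrete, here is a minimal sketch of the kind of linting such a check might perform. It is not the validator's actual implementation: the function name lint_robots, the list of known directives, and the specific warnings are all assumptions chosen for illustration.

```python
# Directives this sketch treats as valid (an assumed list, not an official one).
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Return human-readable warnings for a robots.txt body (hypothetical helper)."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive '{field}'")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in {"allow", "disallow"}:
            if not seen_user_agent:
                warnings.append(f"line {number}: rule appears before any User-agent")
            if value and not value.startswith(("/", "*")):
                warnings.append(f"line {number}: path should start with '/' or '*'")
            if "$" in value[:-1]:
                warnings.append(f"line {number}: '$' is only valid at the end of a path")
    return warnings


if __name__ == "__main__":
    sample = "User-agent: *\nDisallow: private/\nDisalow: /tmp\n"
    for warning in lint_robots(sample):
        print(warning)
```

A file that passes this kind of lint can still block the wrong agent, which is why the bot-specific and reachability checks are listed separately.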

Controlling the Knowledge Graph

Decide who learns from you.

If you are a premium publisher or SaaS platform, you may want to block generic AI scrapers while keeping search engines active.

Scenario

You want to appear in Google Search results but don't want ChatGPT to recite your paywalled articles for free.

Solution

Use the validator to ensure your GPTBot group (a User-agent: GPTBot line followed by Disallow: /) is correctly implemented and kept separate from your User-agent: * rules.
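
The scenario above can also be checked locally. The sketch below uses Python's standard urllib.robotparser module on an example file; the ROBOTS_TXT contents, the check_agents helper, and the example.com URL are illustrative assumptions, and Python's parser may resolve conflicting rules differently from individual crawlers.

```python
from urllib.robotparser import RobotFileParser

# Example policy (assumed): block GPTBot everywhere, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def check_agents(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Map each user agent to whether it may fetch the URL (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    results = check_agents(
        ROBOTS_TXT,
        ["Googlebot", "Bingbot", "GPTBot"],
        "https://example.com/articles/premium-post",
    )
    for agent, allowed in results.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

With this file, Googlebot and Bingbot fall through to the wildcard group and are allowed, while GPTBot matches its own group and is blocked.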

Multilingual Sitemaps

Connecting your infrastructure.

Your robots.txt is also the map room for your crawlers. It should explicitly link to your XML Sitemap.

The Check

Our tool verifies that a Sitemap: https://yoursite.com/sitemap.xml directive exists.

The Global Impact

This is critical for discovering your localized sub-directories (e.g., /fr/, /es/). If the crawler can't find the sitemap via robots.txt, your deep-level translated pages may remain undiscovered.
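
As a rough illustration of the sitemap check, the sketch below extracts Sitemap directives with Python's standard urllib.robotparser (site_maps() requires Python 3.8 or later). The helper names and sample file are assumptions for illustration, not the tool's own logic.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def find_sitemaps(robots_txt: str) -> list[str]:
    """Return every URL declared in a Sitemap: directive (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when none are declared


def has_absolute_sitemap(robots_txt: str) -> bool:
    """True if at least one declared sitemap is an absolute http(s) URL."""
    for url in find_sitemaps(robots_txt):
        parts = urlparse(url)
        if parts.scheme in ("http", "https") and parts.netloc:
            return True
    return False


if __name__ == "__main__":
    sample = "User-agent: *\nAllow: /\nSitemap: https://yoursite.com/sitemap.xml\n"
    print(find_sitemaps(sample))
    print(has_absolute_sitemap(sample))
```

Checking for an absolute URL is a deliberate choice here, since a relative path in a Sitemap line is a common mistake that crawlers may ignore.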